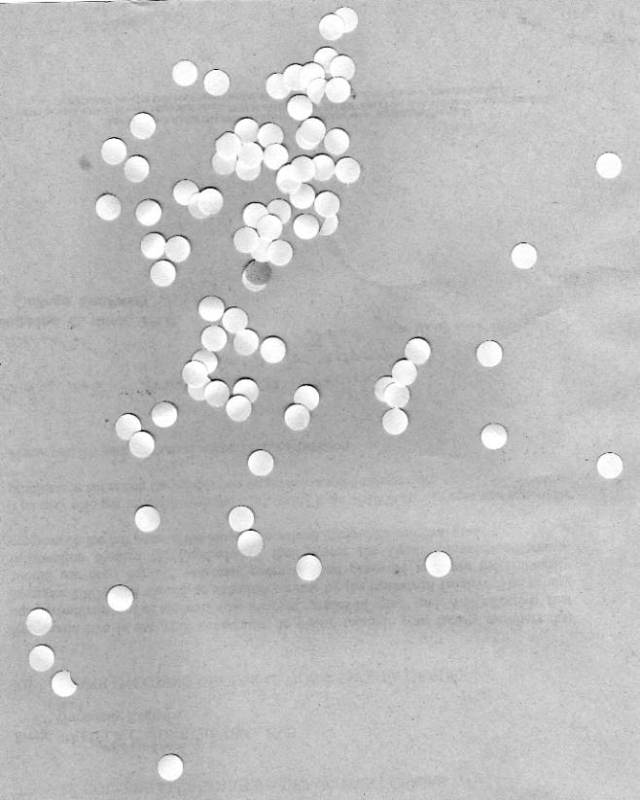

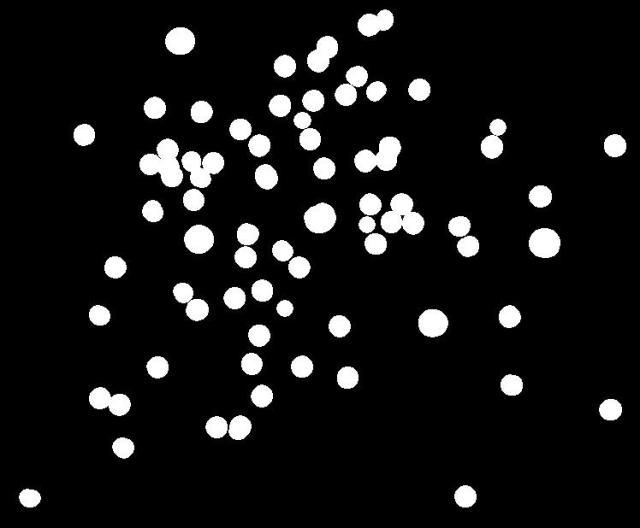

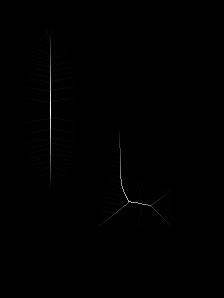

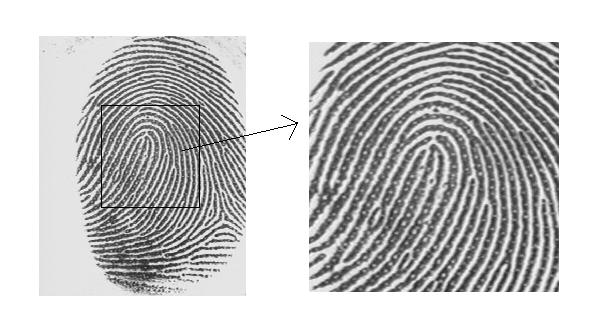

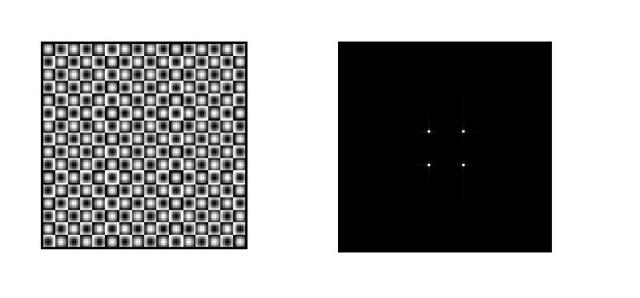

In this activity, which was lengthy but enjoyable, a simple musical piece is selected and image processing is applied to it so that Scilab can determine the tune (pitch) and the tempo (duration) of every note on the staff. The song I selected was timely: “Jingle Bells”. I cropped only the chorus part of the song. The image is shown below.

Part of the “Jingle Bells” chorus that will be played in Scilab

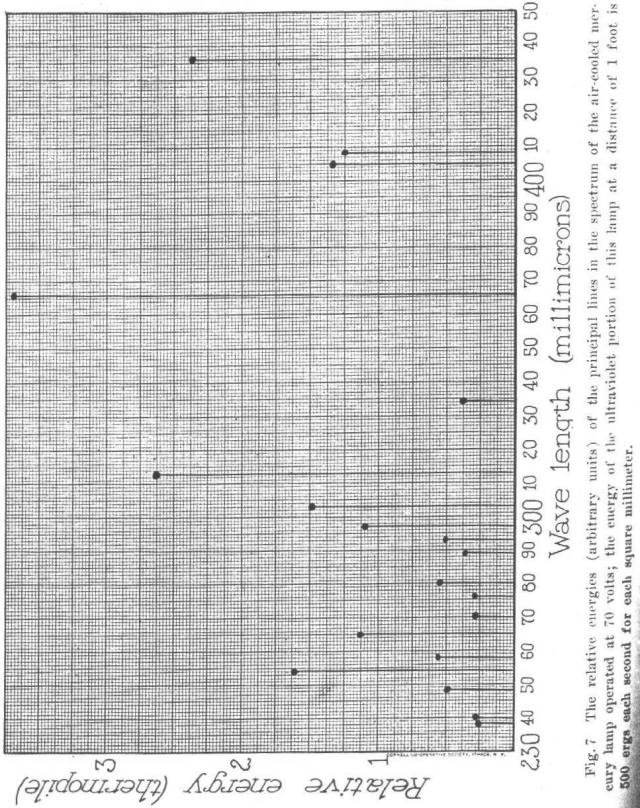

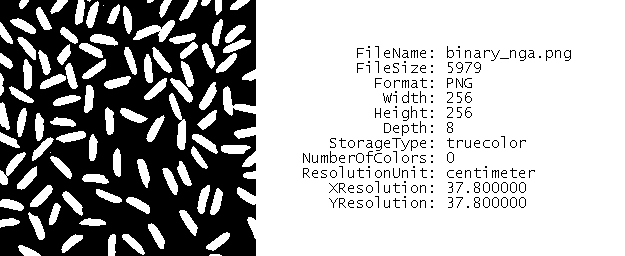

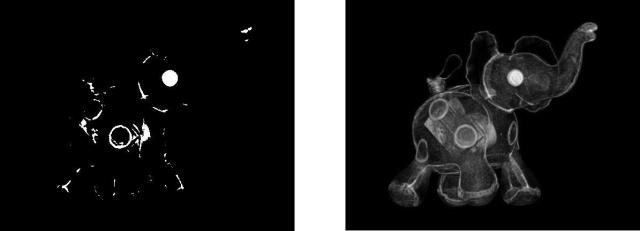

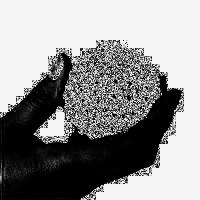

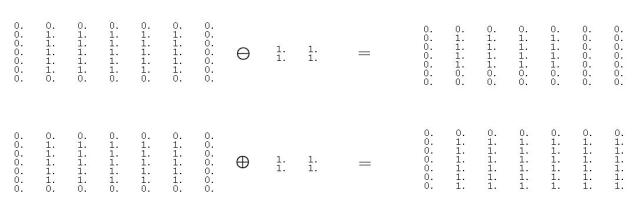

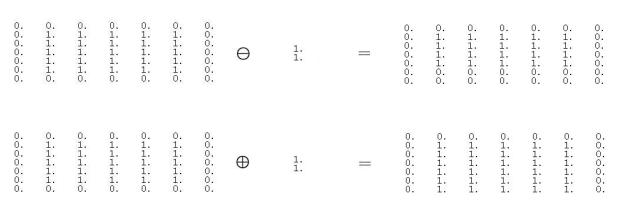

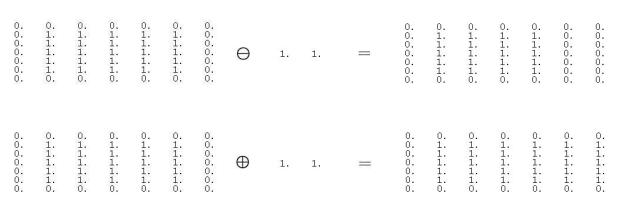

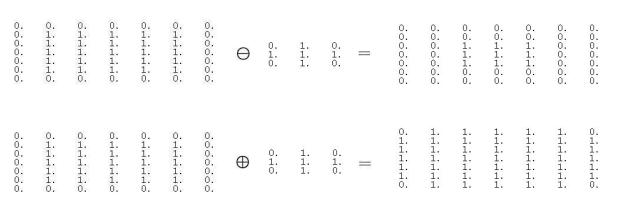

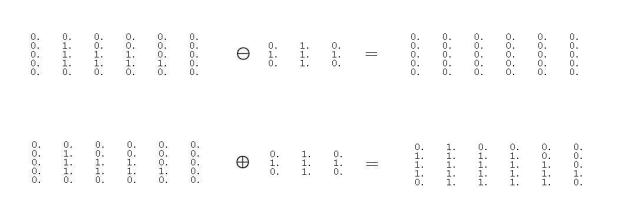

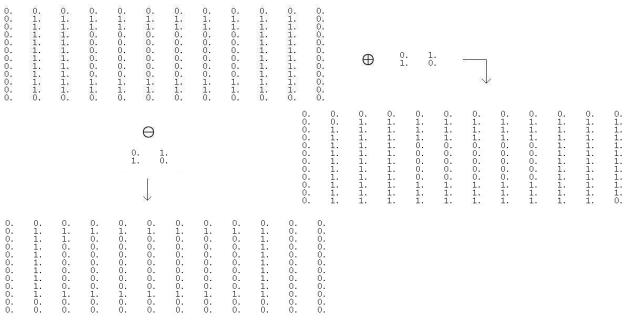

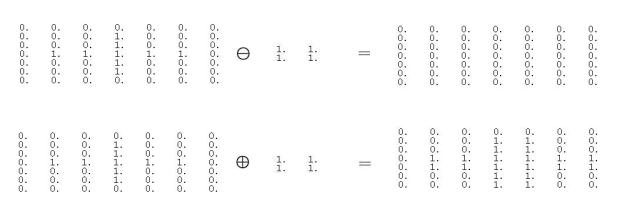

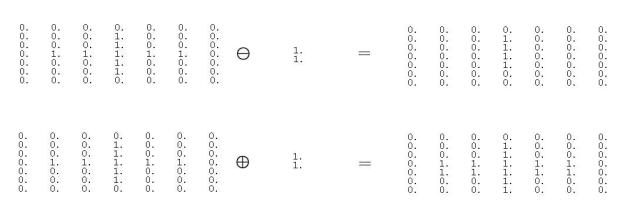

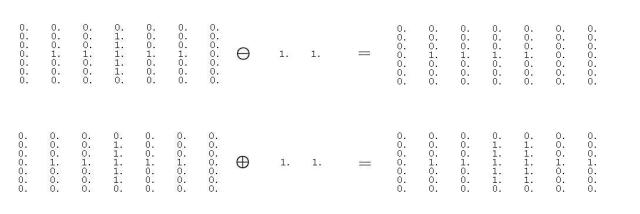

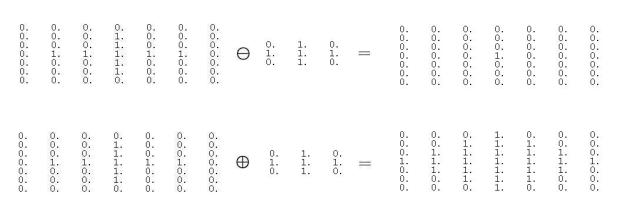

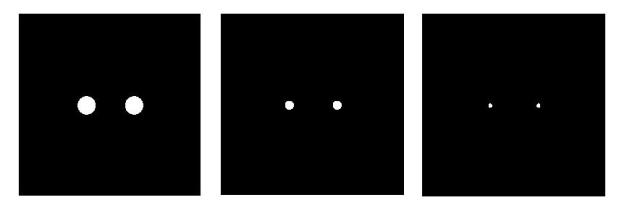

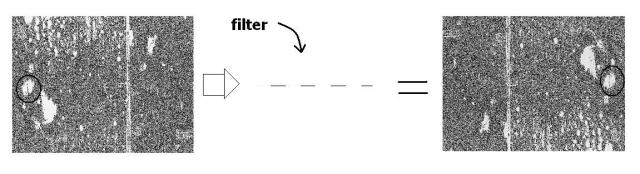

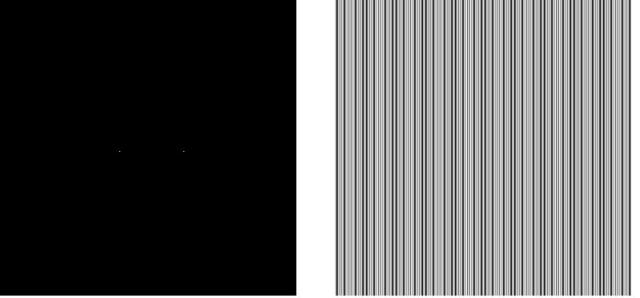

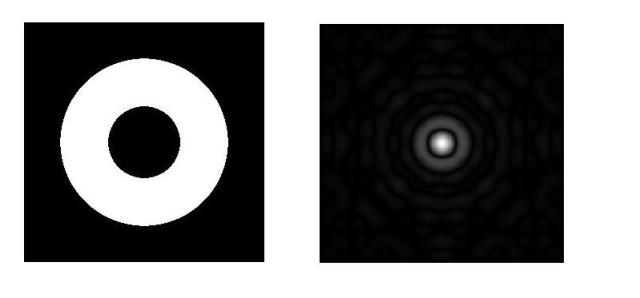

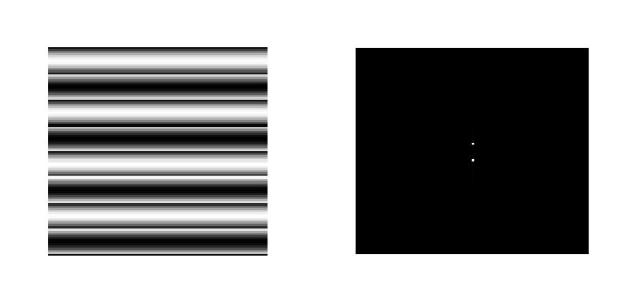

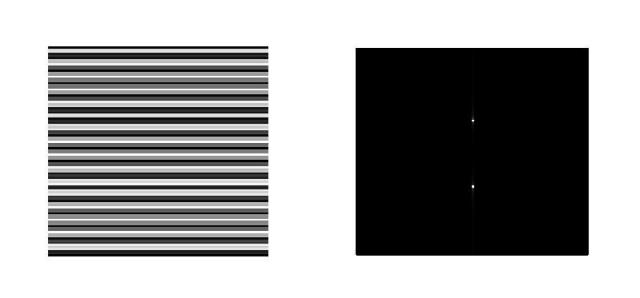

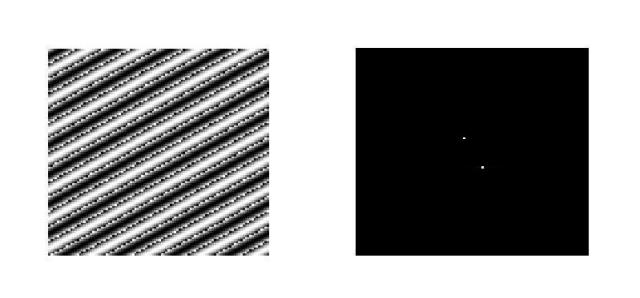

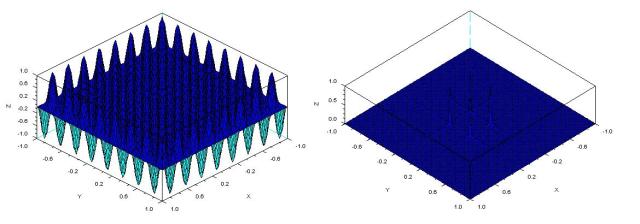

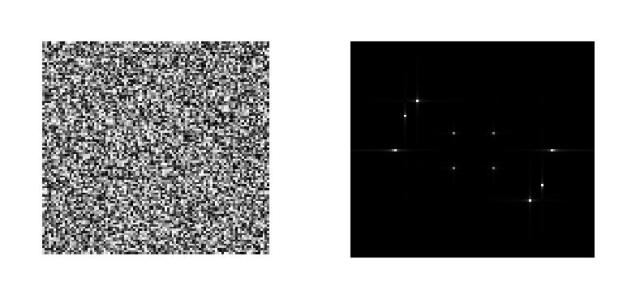

The first thing I did was binarize the image above, thresholding it at 0.75 of the grayscale range so that each note is still distinguishable. Next, I cropped one sample of every type of note in the piece, such as the half note and the quarter note. Then, using the correlation technique from the previous activity, I located where each kind of note appears on the staff. I binarized each correlation image so that a single pixel appears for every matching note. Before adding all those images into a single image, I distinguished each type of note from the rest by assigning it a numerical value proportional to its duration: 1 for an eighth note, 2 for a quarter note, 3 for the quarter-plus-eighth note, 4 for a half note, and 6 for the half-plus-quarter note. Finally, I summed them to produce a single image that presents, in the same order as the notes, the relative tempo of every note on the staff. The code is presented below:

//GETTING THE TEMPO OF THE NOTES

quarter_note = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\quarter_note.jpg');

quarter_note = im2bw(quarter_note,0.75);

//imshow(quarter_note);

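// jingle_bells must already hold the binarized score (it is loaded and thresholded in the tune section further down)

conj_fft_jingle = conj(fft2(jingle_bells));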

fft_quarter = fft2(quarter_note);

convlv_in_fft1 = conj_fft_jingle.*fft_quarter;

correlation1 = abs(fftshift(fft2(convlv_in_fft1)));

correlation1 = (correlation1-min(correlation1))/(max(correlation1)-min(correlation1));

//imshow(correlation);

//histplot(100,correlation);

T_new1 = im2bw(correlation1,0.9);

//imshow(T_new1);

imwrite(T_new1,'correlation_with_quarter.jpg')

half_note = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\half_note.jpg');

half_note = im2bw(half_note,0.75);

fft_half = fft2(half_note);

convlv_in_fft2 = conj_fft_jingle.*fft_half;

correlation2 = abs(fftshift(fft2(convlv_in_fft2)));

correlation2 = (correlation2-min(correlation2))/(max(correlation2)-min(correlation2));

//imshow(correlation);

//histplot(100,correlation);

T_new2 = im2bw(correlation2,1.0);

//imshow(T_new2);

imwrite(T_new2,'correlation_with_half.jpg')

eighth_note = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\eighth_note.jpg');

eighth_note = im2bw(eighth_note,0.75);

fft_eighth = fft2(eighth_note);

convlv_in_fft3 = conj_fft_jingle.*fft_eighth;

correlation3 = abs(fftshift(fft2(convlv_in_fft3)));

correlation3 = (correlation3-min(correlation3))/(max(correlation3)-min(correlation3));

//imshow(correlation);

//histplot(100,correlation);

T_new3 = im2bw(correlation3,1.0);

//imshow(T_new3);

imwrite(T_new3,'correlation_with_eighth.jpg')

half_eighth_note = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\half+eighth_note.jpg');

half_eighth_note = im2bw(half_eighth_note,0.75);

fft_half_eighth = fft2(half_eighth_note);

convlv_in_fft4 = conj_fft_jingle.*fft_half_eighth;

correlation4 = abs(fftshift(fft2(convlv_in_fft4)));

correlation4 = (correlation4-min(correlation4))/(max(correlation4)-min(correlation4));

//imshow(correlation);

//histplot(100,correlation);

T_new4 = im2bw(correlation4,1.0);

//imshow(T_new4);

imwrite(T_new4,'correlation_with_quarter&eighth.jpg')

half_quarter_note = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\half+quarter_note.jpg');

half_quarter_note = im2bw(half_quarter_note,0.75);

fft_half_quarter = fft2(half_quarter_note);

convlv_in_fft5 = conj_fft_jingle.*fft_half_quarter;

correlation5 = abs(fftshift(fft2(convlv_in_fft5)));

correlation5 = (correlation5-min(correlation5))/(max(correlation5)-min(correlation5));

//imshow(correlation);

//histplot(100,correlation);

T_new5 = im2bw(correlation5,1.0);

//imshow(T_new5);

imwrite(T_new5,'correlation_with_half&quarter.jpg')

//PUTTING TEMPO TO THE PIECE

jingle_bells_quarter = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\correlation_with_quarter.jpg');

jingle_bells_quarter = (jingle_bells_quarter - min(jingle_bells_quarter))/(max(jingle_bells_quarter)-min(jingle_bells_quarter));

jingle_bells_quarter = im2bw(jingle_bells_quarter,0.9);

//y = tabul(jingle_bells_quarter);

//imshow(jingle_bells_quarter);

jingle_bells_quarter = 2*jingle_bells_quarter;

jingle_bells_half = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\correlation_with_half.jpg');

jingle_bells_half = (jingle_bells_half - min(jingle_bells_half))/(max(jingle_bells_half)-min(jingle_bells_half));

jingle_bells_half = im2bw(jingle_bells_half,0.9);

//y = tabul(jingle_bells_half);

jingle_bells_half = 4*jingle_bells_half;

jingle_bells_eighth = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\correlation_with_eighth.jpg');

jingle_bells_eighth = (jingle_bells_eighth - min(jingle_bells_eighth))/(max(jingle_bells_eighth)-min(jingle_bells_eighth));

jingle_bells_eighth = im2bw(jingle_bells_eighth,0.9);

//y = tabul(jingle_bells_eighth);

//imshow(jingle_bells_eighth);

jingle_bells_eighth = 1*jingle_bells_eighth;

jingle_bells_half_q = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\correlation_with_half&quarter.jpg');

jingle_bells_half_q = (jingle_bells_half_q - min(jingle_bells_half_q))/(max(jingle_bells_half_q)-min(jingle_bells_half_q));

jingle_bells_half_q = im2bw(jingle_bells_half_q,0.9);

//y = tabul(jingle_bells_half_q);

jingle_bells_half_q = 6*jingle_bells_half_q;

jingle_bells_quarter_e = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\correlation_with_quarter&eighth.jpg');

jingle_bells_quarter_e = (jingle_bells_quarter_e - min(jingle_bells_quarter_e))/(max(jingle_bells_quarter_e)-min(jingle_bells_quarter_e));

jingle_bells_quarter_e = im2bw(jingle_bells_quarter_e,0.9);

//y = tabul(jingle_bells_quarter_e);

jingle_bells_quarter_e = 3*jingle_bells_quarter_e;

new_jingle_bells = jingle_bells_quarter + jingle_bells_half + jingle_bells_eighth + jingle_bells_half_q + jingle_bells_quarter_e;

//t = tabul(new_jingle_bells);

//imshow(new_jingle_bells);
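
One step the listing above leaves implicit is how the per-note tempo values are read back out of the summed image in playing order. Here is a minimal sketch, assuming each note survives as exactly one nonzero pixel (as the thresholding intends). The small matrix `toy` is a hypothetical stand-in for `new_jingle_bells`, and the name `beats` is made up for illustration:

```scilab
// Hypothetical stand-in for new_jingle_bells: one nonzero pixel per note,
// whose value is the tempo weight (1 = eighth, 2 = quarter, 4 = half).
toy = zeros(5, 8);
toy(3, 2) = 2;   // quarter note
toy(2, 4) = 2;   // quarter note
toy(4, 6) = 4;   // half note
toy(3, 7) = 1;   // eighth note

// find() scans the matrix column by column, so the linear indices it
// returns are already ordered from left to right on the staff.
idx = find(toy > 0);

// Read the weights in that order and force a row vector.
beats = matrix(toy(idx), 1, -1);

disp(beats);
```

For this toy matrix the weights come out in the order 2, 2, 4, 1. If a note leaves a small cluster of pixels instead of a single one, each cluster would first have to be reduced to one pixel (for instance by labelling the clusters with bwlabel, as in the tune code); otherwise the same note is counted more than once.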

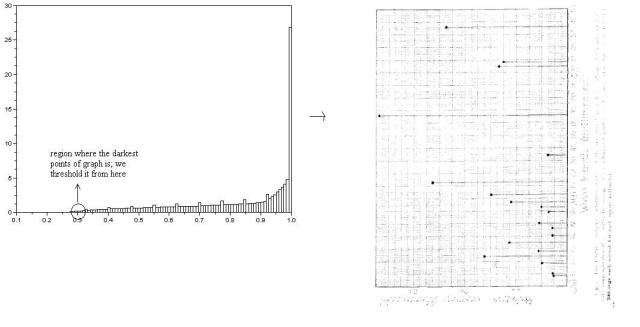

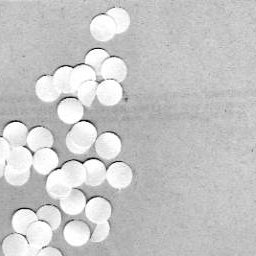

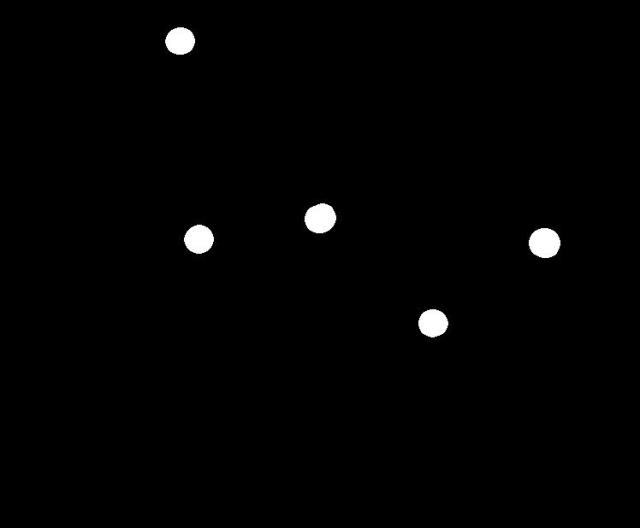

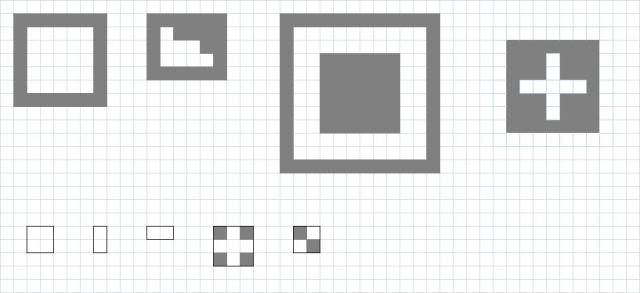

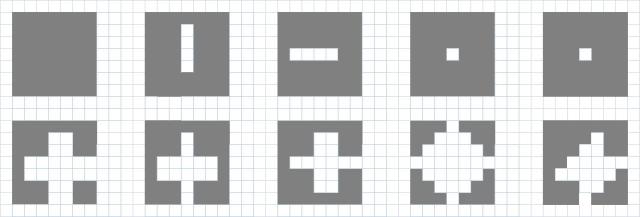

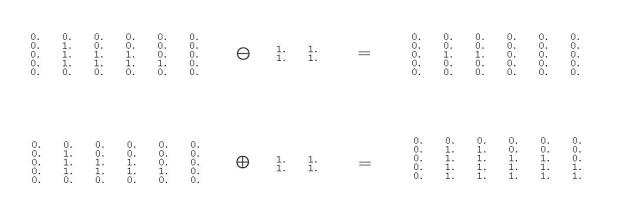

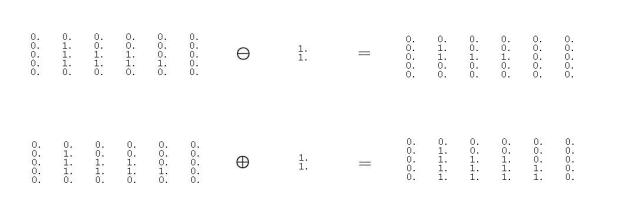

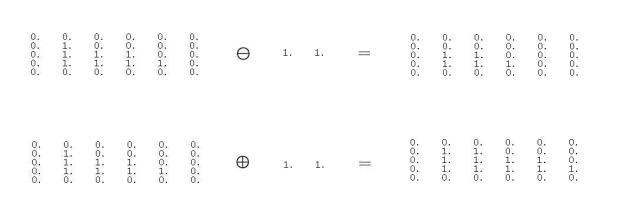

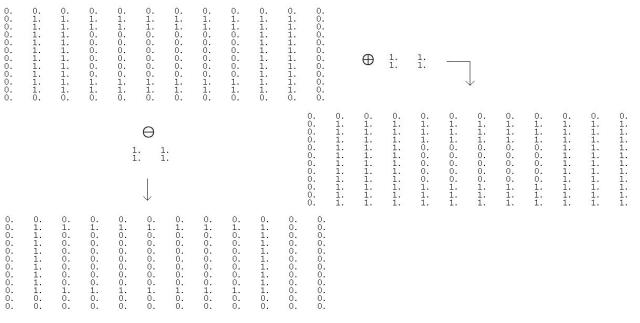

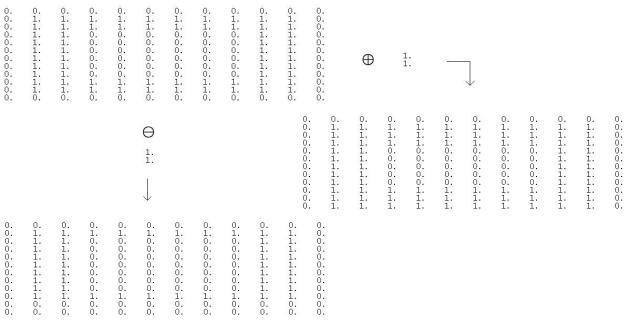

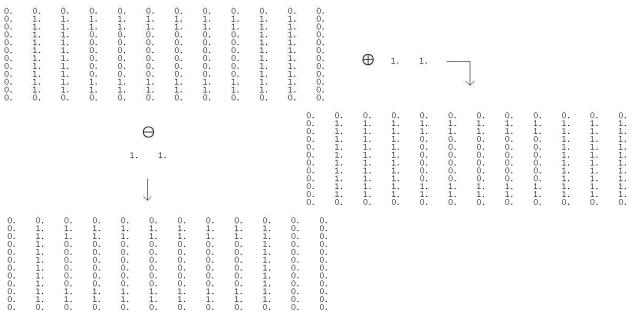

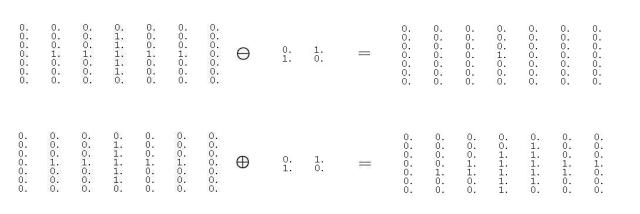

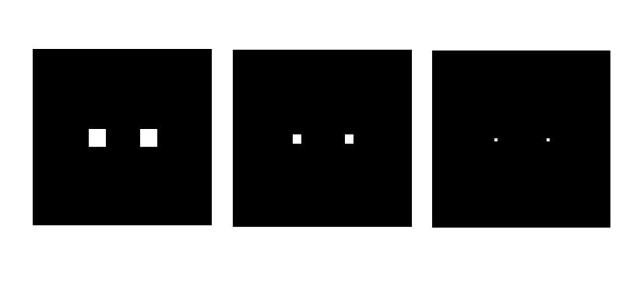

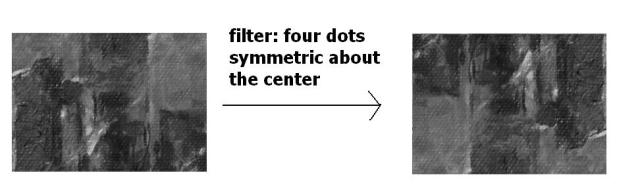

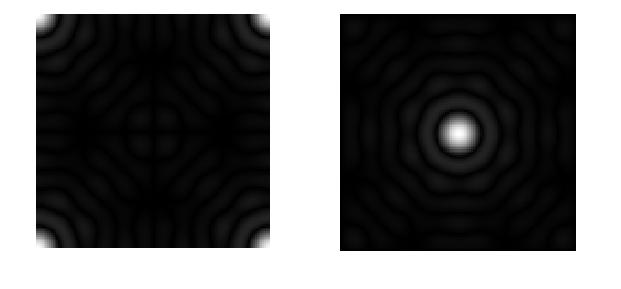

The next problem was to find the corresponding tune for every note, which can be done by relating the height of each note head on the staff to a pitch. However, the last image is useful only for tempo information: the correlation peaks do not always fall on the middle of the oblong note head, because they depend on the shape of the entire note, including the tail, the flags, and any dot beside it. So a different approach is required. I created oblongs of different sizes in Paint and used them as structuring elements for morphological closing and opening, followed by two erosions. This removes the hollows in the half-note heads, the tails and flags of the shorter notes, and the staff lines, and it reduces each note head to a few pixels. I then matched the position of each remaining blob to the conventional positions of the tunes on the staff. In the code, the smallest row index of each blob gives its height on the staff and hence its tune, while the smallest column index gives its order in the piece. The code is presented below:

//FOR DETERMINING THE TUNE

jingle_bells = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\jingle_bells_chorus_inverted.jpg');

jingle_bells = im2bw(jingle_bells,0.75);

//imshow(jingle_bells);

oval_head = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\structuring_element1.jpg');

oval_head = im2bw(oval_head,0.75);

oval_head1 = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\structuring_element.jpg');

oval_head1 = im2bw(oval_head1,0.75);

oval_head2 = imread('D:\academic documents\1st sem AY 10-11\AP 186\Activity_11\structuring_element2.jpg');

oval_head2 = im2bw(oval_head2,0.75);

tune1 = erode(dilate(jingle_bells,oval_head2),oval_head2);

//imshow(tune);scf();

tune2 = dilate(erode(tune1,oval_head1),oval_head1);

//imshow(tune2);

tune3 = erode(erode(tune2,oval_head1),oval_head2);

//imshow(tune3);

tune4 = bwlabel(tune3);

tune = zeros(1,11);

pos = zeros(1,11);

for i=1:11,

[r,c] = find(tune4==i);

tune(1,i) = min(r);

pos(1,i) = min(c);

end;

Q = sort(pos);

tune_final = zeros(1,11);

for j=1:11,

tune_final(1,12-j) = tune(1,find(pos==Q(j)));

end;

function n = note(f,t)

n = sin(2*%pi*f*t);

endfunction;

C = 261.63*2;

D = 293.66*2;

E = 329.63*2;

F_sharp = 369.99*2;

G = 392.00*2;

A = 440.00*2;

B = 493.88*2;

C2 = 523.25*2;

D2 = 587.33*2;

E2 = 659.26*2;

tune_list = zeros(1,11);

for m=1:11,

if tune_final(1,m)==26 then tune_list(1,m)=B;

elseif tune_final(1,m)==18 then tune_list(1,m) =D2;

elseif tune_final(1,m)==34 then tune_list(1,m) =G;

else tune_list(1,m) =A;

end,

end;
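
The chain of exact comparisons above only works if every note head lands on exactly row 26, 18, or 34. A slightly more forgiving lookup, sketched here under the assumption that a tolerance of a couple of pixels is acceptable, picks the nearest known row instead. The function `row_to_freq` and the tolerance `tol` are hypothetical. The rows and frequencies are the ones used above, and anything that matches no known row falls back to A, as in the original `else` branch:

```scilab
// Rows and frequencies taken from the listing above (D2, B, G).
known_rows  = [18, 26, 34];
known_freqs = [587.33*2, 493.88*2, 392.00*2];
A   = 440.00*2;   // fallback pitch, as in the original else branch
tol = 2;          // hypothetical tolerance in pixels

function f = row_to_freq(row, rows, freqs, fallback, tol)
    // distance to the closest known row and its position in the table
    [d, k] = min(abs(rows - row));
    if d <= tol then
        f = freqs(k);
    else
        f = fallback;
    end
endfunction

// Example: a head detected one pixel lower than expected still maps to B.
f_example = row_to_freq(27, known_rows, known_freqs, A, tol);
```

The design choice is only about robustness: a one-pixel shift from a slightly different crop or threshold no longer turns a B into an A.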

Finally, the notes are played using the note function defined in the code.
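
The playback step itself is not listed. Here is a minimal sketch of how it might look, assuming a sampling rate `fs` and a duration `unit` for one tempo unit (both made-up values). It uses a short illustrative list of pitches and weights rather than the detected output:

```scilab
// Same note function as in the listing above.
function n = note(f, t)
    n = sin(2*%pi*f*t);
endfunction

fs   = 8000;    // assumed sampling rate in Hz
unit = 0.125;   // assumed seconds per tempo unit (eighth note = 1)

B = 493.88*2;
freqs = [B, B, B];   // illustrative pitches, not the detected tune_list
beats = [2, 2, 4];   // illustrative weights: quarter, quarter, half

s = [];
for k = 1:length(freqs)
    nsamples = round(beats(k) * unit * fs);
    t = (0:nsamples-1) / fs;        // time axis for this note
    s = [s, note(freqs(k), t)];     // append the note to the melody
end

// sound(s, fs);   // uncomment to play the result
```

With the real data, `freqs` would be `tune_list` and `beats` would hold the tempo weights read from the summed image, both in left-to-right order.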

It actually took me more than a week to finish this activity, but I would say it was worth the time and that I did the activity justice. I would give myself a 10 for this one. And hey, it remains a challenge for me to extend my code to read rests and to add harmonics!

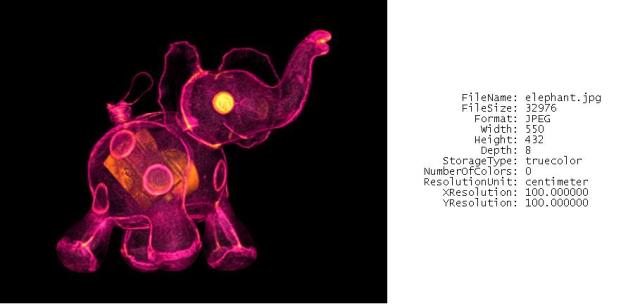

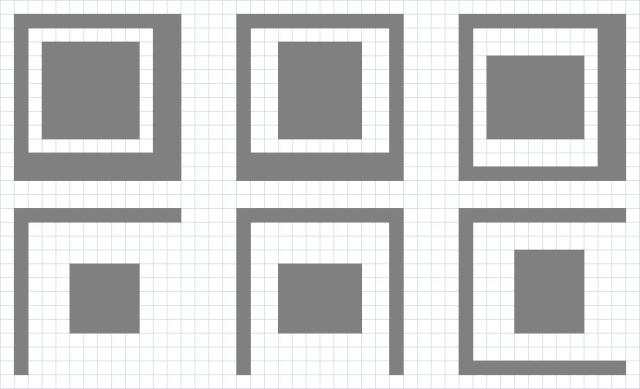

At this point, the last three images are our concern. For the White Patch algorithm, I chose a small part of the background, which is supposedly white bond paper, and arbitrarily picked a point in it to serve as the divisor for the R, G, and B pixel values of the image. After scaling each of the R, G, and B layers by its own factor, I clipped the maximum value to 1 by saturating the values that exceed 1. The images below are the results of this first algorithm applied to the last three images above, in the same order.
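
Here is a minimal sketch of the White Patch step as described, on a tiny made-up RGB image stored as a hypermatrix. The names `img`, `white_ref`, and `balanced` and all pixel values are hypothetical, and the reference is taken from one chosen pixel of the supposedly white background:

```scilab
// Made-up 2x2 RGB image with values in [0, 1]; pixel (1,2) is the
// point picked on the "white" paper.
img = zeros(2, 2, 3);
img(:, :, 1) = [0.4, 0.5; 0.2, 0.8];   // R
img(:, :, 2) = [0.3, 0.6; 0.3, 0.6];   // G
img(:, :, 3) = [0.2, 0.8; 0.4, 0.9];   // B

// Per-channel divisor taken from the chosen white point.
white_ref = [img(1, 2, 1), img(1, 2, 2), img(1, 2, 3)];

balanced = zeros(2, 2, 3);
for k = 1:3
    channel = img(:, :, k) / white_ref(k);   // scale so the patch becomes 1
    balanced(:, :, k) = min(channel, 1);     // saturate values above 1
end
```

After this step the chosen point is exactly white (1, 1, 1), and any pixel brighter than the reference in some channel is clipped to 1 in that channel.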

The balanced images differ in quality; nevertheless, each is significantly improved compared with its original form above.

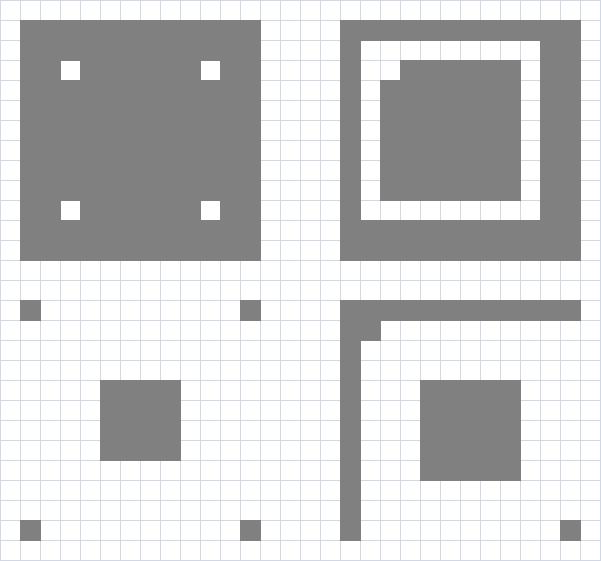

There is not much difference in the quality of the balanced images for the three different WB camera modes. However, the new images look as if they were exposed to a higher-intensity light source. There is also little difference in the rendered colors, especially in the white background, unlike with the first algorithm, where the white backgrounds are clearly distinct.

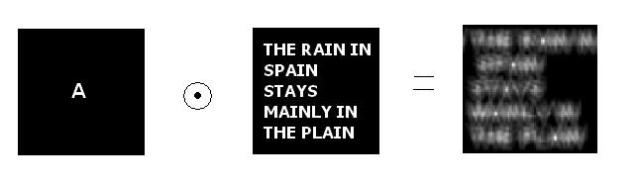

Letter A created in Paint
